December 2023

Our data lake was a mess. It was set up by consultants a few years ago and had all sorts of inefficient design choices. Data was stored uncompressed as CSV files. Data Factory was being used for transformations that should’ve been done with Python. And the Power BI template was overly complicated, difficult to develop on, and extremely slow. As a result of all this, queries in Power BI were taking forever to run. Analysts were complaining, and we were tired of dealing with the poor design; it was time for a change.

I’d been hearing about lakehouses for a while and thought this could be the solution we needed. Lakehouses offer a lot of advantages over traditional data lakes, like ACID transactions and options for improving query performance. The advantage for us, as well, is they allowed a lot of our infrastructure to remain similar, while still providing big benefits.

I did some research and found that Iceberg and Delta were good options. I presented my findings to my manager, who was on board and suggested I delegate some of my other tasks to junior team members so I could focus on this project.

To figure out which lakehouse solution was the best fit, I built a small proof of concept for both Iceberg and Delta. Delta came out on top, with faster loading and query times. Plus, it integrates seamlessly with Databricks, which we were already using. It was a no-brainer.

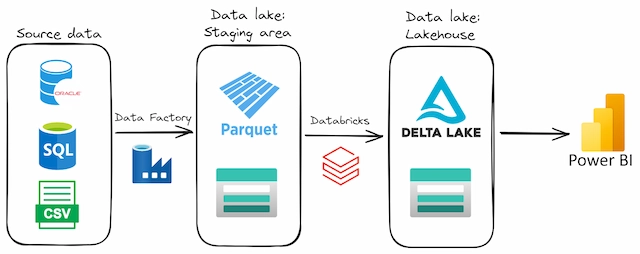

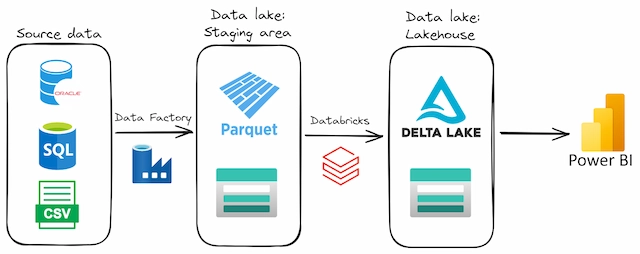

I drew up a draft of what I thought the data infrastructure should look like:

And now onto building it, which I won’t bore you with all the details. I set up storage accounts, Data Factory instances, added integration runtimes, set up a new Databricks workspace, added Unity Catalog, used System-assigned Managed Identities to give the various pieces the permissions to interact with each other.

Before this project, we used Data Factory to extract data from source systems. I thought about moving everything to Databricks, but testing showed that Data Factory was faster and more cost-effective for moving data from databases. So, I decided to stick with Data Factory for data extraction.

For transformations, Databricks was still the way to go. I played around with other options, like using Azure Functions to run Python code serverless and Azure Container Apps for serverless containers. While these options showed promise, I ultimately chose to stick with Databricks because it was cost-effective when running on job clusters and offered a simplified setup.

With a solid proof of concept in hand, it was time to present the Delta lakehouse solution to the board that oversees major decisions related to our data and ICT infrastructure. Our team put together a presentation highlighting the performance stats, benefits over the old infrastructure, cost savings, and any potential risks associated with making the switch.

The results spoke for themselves: queries ran multiple times faster, data loading was streamlined, ACID transactions reduced the risk of data loss or corruption, data loading costs were reduced by a factor of 10, and the Power BI template I developed greatly simplified and sped up querying for analysts.

Designing and developing a Delta lakehouse was the solution we needed to tackle the performance and efficiency issues plaguing our existing data lake (or data swamp). By using a modern format like Delta, and a well-thought-out architecture, we delivered significant improvements in query speed, data integrity, and cost-effectiveness, and ultimately saved cost directly, as well as through saving our team and analysts time.